Captain Stronk: the Orchestrator with community values and a mission to make the Livepeer network more robust

In need of open source contributions?

Looking for a global high performing orchestrator?

On the hunt for low commission rates?

Captain Stronk comes to the rescue!

About Stronk

Hi! I’m a full stack video engineer at OptiMist Video. My main responsibility is working on MistServer, an open source, full-featured, next-generation streaming media toolkit

Besides running this Orchestrator I have a passion for making music, maxing out my level on Steam and cuddling with my cats on the couch

Why stake with Captain Stronk?

- We have been active since December 2021 and have never missed a reward call

- To support our contributions to the Livepeer ecosystem

- We have a professional setup with monitoring, alerts and high uptime. We have consistently been in the top 10 performers and will scale operations as needed to keep it that way

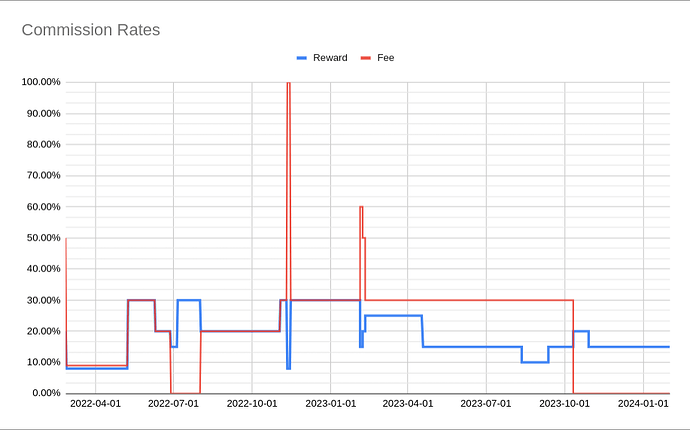

- Our goal is to run at an 8% reward cut and a 30% fee cut. This gives our delegators high returns while ensuring we earn enough revenue to fund our operations and community contributions. We will lower our reward cut over time as our stake increases
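
To make the commission figures concrete, here is a minimal sketch of how a payout would be divided. It assumes that a "cut" is the percentage the orchestrator keeps and that the remainder goes to delegators; the function name `split_earnings` and the amounts (100 LPT in rewards, 1 ETH in fees) are hypothetical and only serve as an illustration.

```python
from typing import Tuple


def split_earnings(amount: float, cut_percent: float) -> Tuple[float, float]:
    """Return (orchestrator_share, delegator_share) for a given cut.

    Assumption: cut_percent is the share the orchestrator keeps,
    and everything else is passed on to delegators.
    """
    if not 0 <= cut_percent <= 100:
        raise ValueError("cut_percent must be between 0 and 100")
    orchestrator_share = amount * cut_percent / 100
    return orchestrator_share, amount - orchestrator_share


# Hypothetical amounts, using the 8% reward cut and 30% fee cut targets.
reward_orchestrator, reward_delegators = split_earnings(100.0, 8)
fee_orchestrator, fee_delegators = split_earnings(1.0, 30)

print(f"LPT rewards: {reward_orchestrator:.2f} kept, {reward_delegators:.2f} to delegators")
print(f"ETH fees:    {fee_orchestrator:.2f} kept, {fee_delegators:.2f} to delegators")
```

Under these assumptions, lowering the reward cut shifts more of the LPT rewards to delegators without changing how fees are divided.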

In short: we are setting a gold standard in terms of reliability and performance, and setting an example of how orchestrators can contribute back to the Livepeer ecosystem

Our setup

We are located in Leiden, with a dedicated Livepeer machine and gigabit up/down connection. We are also cooperating with other high performing orchestrators to ensure high availability across the globe while keeping our costs as low as possible.

We have the following GPUs connected and ready to transcode 24/7:

1x GTX 1070 @ Boston

1x RTX 3080 @ Las Vegas

3x GTX 1070Ti @ Leiden

1x Tesla T4 @ Singapore

Tip: You can visit our public Grafana page for advanced insights

Published Projects

As a part of our mission to make the Livepeer network more robust, we are maintaining the following projects

Tip: Feel free to request custom development work if you need something that can benefit the Livepeer network as a whole

Orchestrator API and supplementary explorer - link

Useful data indexing tool which provides information on events happening on Livepeer's smart contracts. The API aggregates data from the Livepeer subgraph, blockchain data, smart contract events and more. This data is cached so that, even if one of these data sources has issues, the API can always return the latest known info without interruption to service.
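
The caching behaviour described above can be sketched roughly as follows. This is not the actual API code: the class name `LastKnownCache`, the `fetch` callable and the `ttl_seconds` parameter are all hypothetical, and the sketch only illustrates the general idea of serving the last known value when an upstream source fails.

```python
import time
from typing import Any, Callable, Optional


class LastKnownCache:
    """Cache one value and fall back to it when refreshing fails."""

    def __init__(self, fetch: Callable[[], Any], ttl_seconds: float = 60.0):
        self._fetch = fetch              # hypothetical upstream call (subgraph, RPC, ...)
        self._ttl = ttl_seconds
        self._value: Any = None
        self._fetched_at: Optional[float] = None

    def get(self) -> Any:
        now = time.monotonic()
        is_fresh = self._fetched_at is not None and now - self._fetched_at < self._ttl
        if is_fresh:
            return self._value
        try:
            self._value = self._fetch()
            self._fetched_at = now
        except Exception:
            # Upstream is unavailable: keep serving the last known value.
            # If nothing was ever cached there is nothing to fall back to.
            if self._fetched_at is None:
                raise
        return self._value
```

A caller would wrap each data source in its own cache, so that an outage in one source does not affect the values served from the others.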

Status: Prototype has been running for over a year now. A major refactor is currently in progress, as well as a redesign of the frontend.

The new version will be implemented generically (extensible to projects other than Livepeer) and will buffer the state over time, making it possible to retrieve the state at a specific point in time
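
One possible way to look up state at a specific point in time is to keep timestamped snapshots and search them with a binary search. The sketch below assumes snapshots are recorded in chronological order; the class `StateHistory` and its methods are hypothetical names and do not reflect the refactored API.

```python
from bisect import bisect_right
from typing import Any, List, Optional


class StateHistory:
    """Store timestamped snapshots and return the one valid at a given time."""

    def __init__(self) -> None:
        self._timestamps: List[float] = []
        self._states: List[Any] = []

    def record(self, timestamp: float, state: Any) -> None:
        # Assumption: snapshots arrive in chronological order.
        if self._timestamps and timestamp < self._timestamps[-1]:
            raise ValueError("snapshots must be recorded in chronological order")
        self._timestamps.append(timestamp)
        self._states.append(state)

    def at(self, timestamp: float) -> Optional[Any]:
        # Index of the first snapshot strictly after `timestamp`.
        index = bisect_right(self._timestamps, timestamp)
        if index == 0:
            return None  # no snapshot existed yet at that time
        return self._states[index - 1]


history = StateHistory()
history.record(100, {"reward_cut": 10})
history.record(200, {"reward_cut": 8})
print(history.at(150))  # the snapshot recorded at time 100
```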

Stronk Broadcaster - preview

We are working on turning our Livepeer machines into a CDN and providing a simple website where users can trial the Livepeer network. The website allows visitors to stream into the network from the browser and to view and share these streams with anyone. It includes basic statistics, like the delay of the transcoded video tracks versus the source track

Status: Global ingest and delivery of live media is enabled. A side-by-side comparison of the WebRTC stream sent from the browser versus what the viewers receive is also functional. Next up: streaming through a canvas, which allows layering video tracks and adding overlays (or other custom artwork)

Dune Livepeer Dashboard - link

Provides statistics on events happening on Livepeer's smart contracts

Status: Finished, exploring new stats to add

Orchestrator Linux setup guide - link

Solves the knowledge gap of Windows users who want to improve their setup by running their Orchestrator operations on a Linux machine

Status: First public release. We have received plenty of feedback from orchestrators on further information the guide should cover

Orchestrator Discovery Tracker - preview

Pretend Broadcaster which runs discovery checks against all active Orchestrators and makes the response times publicly available. These are the same requests a go-livepeer Broadcaster makes when populating its list of viable Orchestrators
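
The measurement loop behind such a tracker could look roughly like the sketch below. It deliberately leaves out the discovery request itself: `probe` is a hypothetical callable standing in for whatever request the tracker sends, and `measure_response_times` is an illustrative name, not part of go-livepeer.

```python
import time
from typing import Callable, Dict, Iterable, Optional


def measure_response_times(
    addresses: Iterable[str],
    probe: Callable[[str], None],
) -> Dict[str, Optional[float]]:
    """Time one probe per address; return milliseconds, or None on failure."""
    results: Dict[str, Optional[float]] = {}
    for address in addresses:
        started = time.perf_counter()
        try:
            probe(address)  # hypothetical stand-in for the discovery request
        except Exception:
            results[address] = None  # unreachable or failed to respond
            continue
        results[address] = (time.perf_counter() - started) * 1000.0
    return results
```

Recording failures as `None` rather than dropping them keeps unreachable Orchestrators visible in the published results.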

Status: Prerelease. On hold while we work on the above projects

Shoutouts

The Livepeer network has an awesome community of Orchestrators. If you are not yet convinced to stake with Captain Stronk, the following Orchestrators are also a good place to stake your LPT:

titan-node.eth: the face of Livepeer and also a public transcoding pool. Hosts a weekly water cooler chat in the Livepeer Discord to talk Livepeer, Web3 or tech in general

video-miner.eth: transcoding pool run by a group of Orchestrators (including myself)

xeenon.eth: a web3 media platform and promising new broadcaster on the network